Quarterly publication of the Laboratoire d'analyse et d'architecture des systèmes of the CNRS

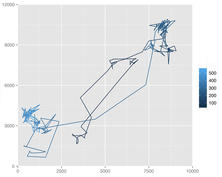

The overall objective of this research is cooperative visual mapping and localization for multiple vehicles moving in convoy formation along routes that are unknown a priori. A large-scale mapping approach and a map-based localization method are combined to achieve cooperative navigation. The mapping approach is based on hierarchical SLAM (a global level and local maps), generalized so that it can be executed on multiple vehicles moving as a convoy. A global 3D map maintains the relationships between a series of local submaps built by the first vehicle of the convoy (the leader), defining a path that all other vehicles (the followers) must stay on. Only single camera setups are considered. The world is assumed static, but with moved objects between two. Each robot is equipped with low-cost proprioceptive sensors (inertial) or GPS to compute an initial coarse estimate of its position, and with cameras (monocular or stereo vision) to acquire image sequences and to extract and characterize 3D features from the perceived scenes. These 3D landmarks are tracked and identified across successive images acquired while the robot moves, which allows the robot to localize itself accurately.

This work also describes a method for the detection, tracking and identification of mobile objects observed from a moving camera, typically a camera embedded on a robot. A global architecture is presented that uses only vision to solve several problems simultaneously: the Localization of the camera (or vehicle), the Mapping of the environment, and the Detection and Tracking of Moving Objects. The goal is to build a convenient description of a dynamic scene from vision: what is static? What is dynamic? Where is the robot? How do other mobile objects move? Two approaches are combined. First, a clustering method separates static points, which are used by the SLAM algorithm, from dynamic ones, which are used to segment mobile objects and estimate their status. Second, a classification approach identifies objects of known classes in image regions. These two approaches are combined in an active method based on a Motion Grid, which actively selects where to look for mobile objects.

During this work I collaborated closely with Thales Optronique S.A. (France) and the LASMEA laboratory in Clermont-Ferrand (France). My work was performed in the context of the RINAVEC project funded by ANR, the French Agence Nationale de la Recherche.
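The hierarchical map described above, a global level that keeps the relationships between local submaps, can be illustrated with a minimal sketch. It is not the implementation used in this work: it assumes planar poses instead of the 3D map mentioned in the text, ignores uncertainty entirely, and the names `Pose2D`, `GlobalMap`, `add_submap` and `to_global` are hypothetical. It only shows how a pose estimated inside a local submap (for example by a follower) could be expressed in the global frame by chaining the relative transforms stored at the global level.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Pose2D:
    """Planar rigid transform: translation (x, y) and heading theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Express `other` (given in this pose's frame) in this pose's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            self.x + c * other.x - s * other.y,
            self.y + s * other.x + c * other.y,
            self.theta + other.theta,
        )


@dataclass
class GlobalMap:
    """Global level: one relative transform per submap, from the previous
    submap origin to this one (the first is relative to the world origin)."""
    links: list = field(default_factory=list)

    def add_submap(self, rel_to_previous: Pose2D) -> int:
        """Register a new local submap and return its index."""
        self.links.append(rel_to_previous)
        return len(self.links) - 1

    def submap_origin(self, index: int) -> Pose2D:
        """Chain the relative transforms up to submap `index`."""
        pose = Pose2D(0.0, 0.0, 0.0)
        for link in self.links[: index + 1]:
            pose = pose.compose(link)
        return pose

    def to_global(self, index: int, local_pose: Pose2D) -> Pose2D:
        """Convert a pose expressed in submap `index` to the global frame."""
        return self.submap_origin(index).compose(local_pose)


# Hypothetical usage: the leader opens two submaps; a follower localized
# inside the second submap is then placed in the global frame.
gmap = GlobalMap()
gmap.add_submap(Pose2D(0.0, 0.0, 0.0))
second = gmap.add_submap(Pose2D(10.0, 0.0, math.pi / 2))
follower = gmap.to_global(second, Pose2D(1.0, 0.0, 0.0))
```

Storing only relative transforms at the global level keeps each submap independent of the others, which is the usual motivation for a hierarchical organization; a real system would also propagate covariances along the chain.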